First AI Agent to Win a Competitive Optimization Programming Contest (804 Participants, $1,300 Compute).

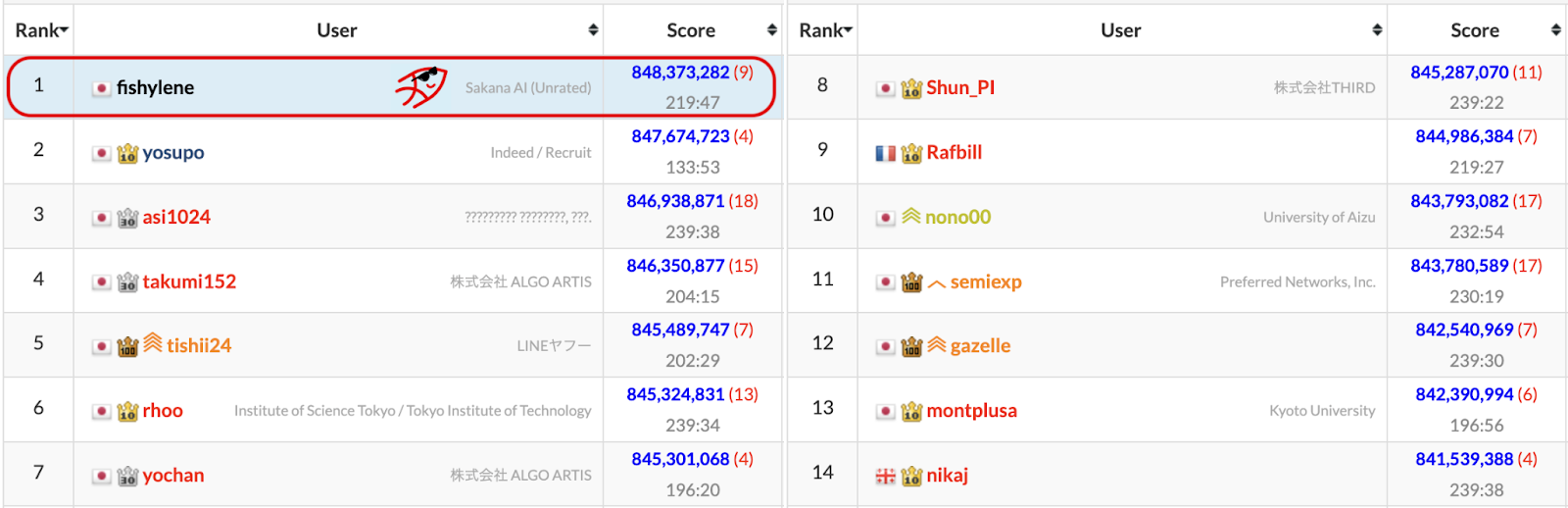

Final Standings: https://atcoder.jp/contests/ahc058/standings

Sakana AI’s “ALE-Agent” achieved a historic milestone by securing 1st place in the AtCoder Heuristic Contest 058, outperforming 804 human participants. To put the difficulty of these optimization challenges in context, an OpenAI agent previously secured 2nd place in the AHC world tournament last August. This victory marks the first known instance of an AI agent winning a major optimization programming contest in real time. The result demonstrates that by utilizing inference-time scaling with multiple frontier models, AI agents can now match or exceed the performance of top human experts in complex tasks requiring extended reasoning.

During the 4-hour contest, our agent autonomously discovered a novel algorithm that outperformed the problem setters’ intended solution. While the problem setters anticipated a standard approach combining constructive heuristics and simulated annealing (SA), our ALE-Agent independently derived a “virtual power” heuristic and a sophisticated SA strategy with diverse neighborhood search operations, allowing it to escape local optima more effectively than human competitors.

Operating at a total cost of approximately $1,300, the agent engaged in parallel code generation and iterative analysis, proving that AI is now capable of the original scientific discovery and trial-and-error required for high-level problem solving.

For detailed information, including the logs and analysis output by ALE-Agent during the contest, see https://sakanaai.github.io/fishylene-ahc058/ and our earlier NeurIPS’25 paper.

Introduction

The AtCoder Heuristic Contest (AHC) is a series of programming competitions focused on optimization problems related to real-world industrial challenges, such as logistics optimization and factory production scheduling. Each contest typically draws approximately 1,000 programmers, including experts active in various industrial sectors. Participants tackle a single, complex coding challenge, with contest durations ranging from four hours to two weeks.

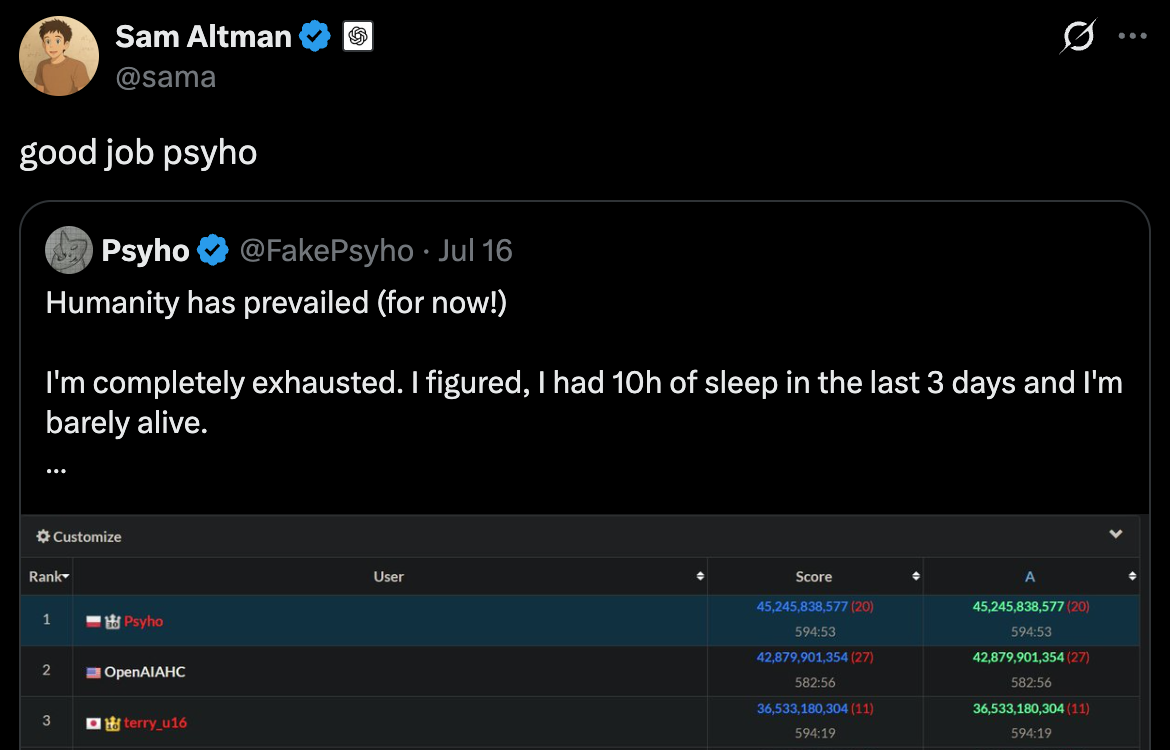

AHC has garnered significant global attention. In August 2025, a world championship featuring top-tier players was held, where an AI agent from OpenAI participated and took 2nd place. Sakana AI has also co-developed ALE-Bench, a benchmark platform based on AHC, in collaboration with AtCoder Inc. Furthermore, under special permission, our AI agent, ALE-Agent, has been continuously participating in AHCs in real time.

Mention of the AHC World Championship by OpenAI CEO Sam Altman:

https://x.com/sama/status/1945540005805658440

Overview of AHC058

AHC058, held on December 14, 2025, was conducted over a 4-hour competition window. The problem involved a setting where participants could produce machines with hierarchical relationships, such as multiple types of “apple-producing machines” and “machines that build those machines.” The objective was to construct an efficient production planning algorithm by determining which types and hierarchies of machines to upgrade and in what specific order.

While the setting may seem humorous at first glance, these hierarchical production dependencies mirror real-world supply chains, food webs, and manufacturing processes, making it a scientifically interesting problem setup. For further details on the problem, please refer to the contest’s problem statement.

Visualization of the problem setting and the AI agent's response (Created using the official AHC058 Visualizer)

ALE-Agent began making submissions two hours after the contest started and immediately leapt to 1st place in the provisional standings.

Standings at the time of ALE-Agent's first submission

In the middle of the contest, ALE-Agent was in a fierce dead heat with yosupo, the eventual 2nd-place finisher. ALE-Agent regained 1st place approximately two and a half hours into the contest and maintained the lead until the end to secure the victory.

Score progression during the contest

Comparison: ALE-Agent’s Solution vs. Expected Human Solutions

In AHC058, the solution expected by the problem author was an approach that used algorithms like Greedy methods or Beam Search to determine a global strategic plan, followed by Simulated Annealing (SA) to refine the plan’s finer details.

ALE-Agent’s answer followed the same basic flow as a human: “construction by Greedy → refinement by SA.” However, it was a quintessential “AI-style” solution that maximized the AI’s strengths: numerous implementations and exhaustive trial and error. Analysis of the final program revealed the following characteristics:

- Unique Greedy Implementation

The agent implemented a parameterized Greedy method and initially performed a search with randomized elements. This allowed it to consider a diverse range of plans, creating an initial strategy robust against various input cases. Interestingly, it introduced a unique heuristic called “Virtual Power,” which assigns evaluation values to machines that are not yet operational as if they already possessed value.

- Rich Neighborhood Operations in Simulated Annealing

In addition to typical local neighborhood operations (e.g. changing a single step within the 500-step production plan or swapping the order of two steps), the agent prepared three types of operations for large-scale plan changes using Greedy methods to ensure diversity in its neighborhood search. Analysis confirmed that these large-scale plan reorganizations contributed significantly to the final score improvement.

- Pursuit of Execution Efficiency

The agent leveraged its mathematical capabilities by implementing a high-speed simulation function. Furthermore, numerous constant-time speedups were applied, such as the use of precomputed tables and the omission of redundant processing. Performance was pursued down to fine details that would be prohibitively time-consuming for a human to perform manually.
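To make the mix of small and large neighborhood moves concrete, here is a minimal Python sketch of simulated annealing on a toy two-level production model. Everything in it is an assumption made for illustration: the model in `simulate`, the costs in `COST`, the 50-step horizon `T`, the move probabilities, the temperature schedule, and the helper `rebuild_suffix` are invented here and are neither the AHC058 rules nor ALE-Agent's actual code. It also does not attempt to reproduce the “Virtual Power” heuristic. The large move simply rewrites the tail of the plan with a randomly parameterized constructive rule, which is one simple way a greedy-style rebuild can let the search jump between global strategies.

```python
import math
import random

T = 50              # toy horizon (hypothetical; the article mentions 500 steps)
COST = (0, 5, 40)   # hypothetical costs: idle, apple machine, machine builder


def simulate(plan):
    """Return the final apple count for a plan in the toy model."""
    apples, makers, builders = 0, 1, 0
    for action in plan:
        # A purchase that cannot be afforded is silently skipped.
        if action and apples >= COST[action]:
            apples -= COST[action]
            if action == 1:
                makers += 1
            else:
                builders += 1
        apples += makers     # level-0 machines produce apples
        makers += builders   # level-1 machines produce level-0 machines
    return apples


def rebuild_suffix(plan, start, rng):
    """Large move: rewrite plan[start:] with a randomly parameterized rule."""
    stop_builders = rng.randint(start, T)        # buy builders before this step
    stop_makers = rng.randint(stop_builders, T)  # then buy apple machines
    new_plan = plan[:start]
    for t in range(start, T):
        new_plan.append(2 if t < stop_builders else 1 if t < stop_makers else 0)
    return new_plan


def anneal(iterations=20000, t_start=20.0, t_end=0.1, seed=0):
    """Simulated annealing that maximizes simulate(plan)."""
    rng = random.Random(seed)
    plan = [0] * T
    score = simulate(plan)
    best_plan, best_score = plan[:], score
    for i in range(iterations):
        # Geometric cooling from t_start down to t_end.
        temp = t_start * (t_end / t_start) ** (i / iterations)
        r = rng.random()
        cand = plan[:]
        if r < 0.45:     # small move: change a single step
            cand[rng.randrange(T)] = rng.randrange(3)
        elif r < 0.9:    # small move: swap two steps
            a, b = rng.randrange(T), rng.randrange(T)
            cand[a], cand[b] = cand[b], cand[a]
        else:            # large move: rebuild everything after a cut point
            cand = rebuild_suffix(plan, rng.randrange(T), rng)
        cand_score = simulate(cand)
        delta = cand_score - score
        # Metropolis rule: always accept improvements, sometimes accept losses.
        if delta >= 0 or rng.random() < math.exp(delta / temp):
            plan, score = cand, cand_score
            if score > best_score:
                best_plan, best_score = plan[:], score
    return best_plan, best_score
```

Calling `anneal()` returns the best plan found and its score. In this toy setting, setting the large-move probability to zero is an easy way to see how much harder it becomes to switch between "invest in builders first" and "invest in apple machines first" using single-step changes alone.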

Analyzing the process through which ALE-Agent generated its response revealed that it implemented the solution while deepening its understanding of the problem’s characteristics. (Logs can be viewed on this page).

The latest version of ALE-Agent is equipped with a mechanism to repeat trial and error by generating multiple programs simultaneously, summarizing those results to generate insights, and utilizing them for subsequent code generation. Looking at the insights generated by ALE-Agent, we can see it examining the problem based on experience; it mentioned the compound interest effect in connection with investment knowledge, devised high-speed algorithms using mathematics from an early stage, and discussed the nature of the search space, noting that initial strategies cause significant differences.
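The generate, evaluate, summarize cycle described above can be outlined as a short loop. The following Python sketch is only a structural illustration under loud assumptions: `generate_candidate`, `evaluate`, `summarize`, and `run_agent` are hypothetical names, the "programs" are plain numbers, and no LLM is called. It is not ALE-Agent's implementation.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def generate_candidate(insights, seed):
    """Stand-in for an LLM call that writes a program (here: a number)."""
    rng = random.Random(seed)
    # Toy assumption: more accumulated insights shift candidates upward.
    return rng.gauss(len(insights), 1.0)


def evaluate(candidate):
    """Stand-in for running a program on local test cases."""
    return -abs(candidate - 5.0)  # toy score, best when candidate == 5


def summarize(results):
    """Stand-in for the step that distills results into a textual insight."""
    cand, score = max(results, key=lambda r: r[1])
    return f"best candidate this round was {cand:.2f} (score {score:.2f})"


def run_agent(rounds=4, parallel=8):
    """Repeat: generate in parallel, evaluate, summarize, feed insights back."""
    insights = []
    best = (None, float("-inf"))
    for r in range(rounds):
        seeds = [r * parallel + k for k in range(parallel)]
        with ThreadPoolExecutor(max_workers=parallel) as pool:
            cands = list(pool.map(lambda s: generate_candidate(insights, s), seeds))
        results = [(c, evaluate(c)) for c in cands]
        insights.append(summarize(results))  # used by the next round
        best = max([best, *results], key=lambda x: x[1])
    return best, insights
```

The point of the sketch is the data flow: each round's results are condensed into an insight, and the growing list of insights is passed to every generation call in the following round.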

We received comments regarding ALE-Agent’s performance and approach from two experts familiar with the optimization field and AHC.

From Hiroomi Nochide (AtCoder handle: itigo):

Actually, before the contest started, I thought this problem would be difficult for LLMs. This is because solving this problem with a Greedy method requires experimental insight that LLMs typically struggle with, and I thought a high score would be impossible without this human-centric consideration. However, when the results came out, I was stunned to see fishylene win.

Checking the logs, it tried a vast number of patterns with promising directions and discovered a clever simulated annealing method that was not anticipated at the time the problem was created. I am impressed, thinking, ‘How did it find such a method?’ (In the logs, the parts requiring the experimental insight I initially expected did not appear, and given that humans still excelled in the Greedy portion, I believe human accuracy in consideration is still superior. However,) the overwhelming number of trial-and-error steps combined with LLM reasoning is an advantage humans do not have. While I feel fishylene is a formidable rival, I believe this technology will be a tremendous weapon for humanity.

(Note: fishylene is ALE-Agent’s AtCoder account name)

Hiroomi Nochide authored the problem for AHC058. He is one of the world’s top players (24th in AHC world rankings) and a professional in this field at ALGO ARTIS CORPORATION.

From Yoichi Iwata (AtCoder handle: wata):

This problem required optimizing investment plans for multiple series of production machines, where the high-level choice of which series to select as ‘final investment targets’ and ‘intermediate investment targets’ was essentially the core issue. With simple local improvement methods that only slightly change the investment target at each turn, it is difficult to switch this global strategy midway, often leading to poor local optima.

The expected solution from the author’s side was a two-stage approach: first explore a wide range of global investment plan candidates using a lightweight solver, and then spend time optimizing the promising ones. In contrast, the solutions from ALE-Agent and the runner-up, yosupo, were based on local search but introduced ‘Large Neighborhoods’ that change a huge portion of the investment plan at once to escape local optima. In particular, ALE-Agent utilized a diversified Greedy method to reconstruct large parts of the plan, which seems to have led to its performance advantage.

Historically, ALE-Agent has tended to choose solutions within the author’s expected range, yet its high implementation and optimization power allowed it to reach the top ranks among many participants using similar methods, especially in short-duration contests. This time, it was very impressive to see it go one step further and reach a solution that exceeded the author’s expectations.

Yoichi Iwata oversees AHC at AtCoder. He has an outstanding track record in this field, including winning the 2010 TopCoder Open. His scores in pre-contest test plays sometimes exceed the top participant scores, and he ensures the high quality of AHC problems.

Resources

ALE-Agent is an agent that performs algorithm discovery by utilizing multiple LLMs to create solutions in parallel, selecting the best ones, and reasoning further based on the results of trial and error. As such, it requires a high volume of LLM calls. The resources utilized during the 4-hour contest were as follows:

- LLM Calls:

  - GPT-5.2 (reasoning effort: high): 2,654 calls

  - Gemini 3 Pro Preview (thinking level: high): 2,119 calls

- Total Cost: Approximately $1,300 (including about $1,000 in API fees and infrastructure costs like AWS).
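For a rough sense of scale, the figures above can be combined into per-call and per-minute averages. The short Python calculation below assumes the "about $1,000" refers to API fees alone and treats all calls as equally priced, which is a simplification; the variable names are illustrative.

```python
calls = {"GPT-5.2": 2654, "Gemini 3 Pro Preview": 2119}
total_calls = sum(calls.values())      # 4773 calls in total

api_cost_usd = 1000                    # approximate, assumed to be API fees only
total_cost_usd = 1300                  # approximate, including infrastructure
contest_minutes = 4 * 60

api_cost_per_call = round(api_cost_usd / total_calls, 2)      # 0.21
total_cost_per_call = round(total_cost_usd / total_calls, 2)  # 0.27
calls_per_minute = round(total_calls / contest_minutes, 1)    # 19.9
```

Under these assumptions, the agent averaged roughly 20 LLM calls per minute at about $0.21 in API fees per call.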

This result is significant as it demonstrates that even for multi-hour tasks, by scaling inference costs and running a properly designed AI agent, AI can reach or surpass the performance of top human experts.

Identifying which specific elements of ALE-Agent’s design contributed most to this result remains an important research task for the future. Our current analysis suggests that in addition to scaling LLM calls and injecting domain knowledge, a “self-learning mechanism,” which extracts insights from trial-and-error trajectories and reflects them in the next improvement cycle, played an important role.

Discussion and Future Challenges

As highlighted in evaluation reports such as those from METR (Model Evaluation and Threat Research), the latest AI models are beginning to show high proficiency in tasks requiring several hours of human effort. Our result follows this trend but is unique in showing that an AI agent with appropriate mechanisms and inference scaling can rival top human experts.

However, at this point, AI does not always rival or surpass top humans. The table below shows ALE-Agent’s past performance. While ALE-Agent has often ranked highly in previous AHCs, AHC058 marks its first victory. Additionally, its calculated virtual rating is 2592, which corresponds to 66th place among active users.

ALE-Agent's AHC Performance History

Official AtCoder History: https://atcoder.jp/users/fishylene/history?contestType=heuristic

Visualization of ALE-Agent’s past AHC performance and rating. The graph shows the contribution of performance values from each contest to the overall rating. https://atcoder-graphs.vercel.app/#contributorGraph

Looking ahead, we aim to increase stability to ensure consistently high performance across similar tasks and to evolve our agents to rival top experts in longer-term tasks lasting several days or more. To achieve this, we will focus on balancing efficient human-like thinking with trial and error (moving away from purely heavy LLM call reliance) and acquiring more advanced autonomous management capabilities.

In real-world problem-solving, the process where humans interpret, generalize, and refine the unexpected discoveries presented by AI is highly effective. As noted in our report on the ICFP Programming Contest 2025, Sakana AI positions AI not as a replacement for humans, but as a partner that expands human exploration capabilities. Measuring achievements by AI alone, as we did here, is vital for understanding the current strengths and weaknesses of AI as a partner, and we believe this result represents a significant milestone in that journey.

Conclusion

Finally, we would like to express our deep gratitude to AtCoder Inc. for their continuous cooperation and to ALGO ARTIS CORPORATION for hosting this contest.

Sakana AI will continue to explore new possibilities for intelligence and the practical application of AI agents in the real world.

We are Hiring Software Engineers & Interns!

Sakana AI is looking for experienced software engineers to lead the productization of platforms that solve complex real-world problems and accelerate AI-driven discovery, including projects like ALE-Agent. We are also looking for interns interested in the further development and industrial application of AI agents. Join our team and help us turn cutting-edge AI agent technology into practical value.

Details and Application: https://sakana.ai/careers/