At Sakana AI we decided to rethink an important feature at the heart of cognition: time. The Continuous Thought Machine is a new kind of artificial neural network which uses the synchronization between neuron dynamics to solve tasks.

Summary

Sakana AI is proud to release the Continuous Thought Machine (CTM), an AI model that uniquely uses the synchronization of neuron activity as its core reasoning mechanism, inspired by biological neural networks. Unlike traditional artificial neural networks, the CTM uses timing information at the neuron level, which allows for more complex neural behavior and decision-making processes. This innovation enables the model to “think” through problems step by step, making its reasoning process interpretable and human-like. Our research demonstrates improvements in both problem-solving capability and efficiency across various tasks. The CTM represents a meaningful step toward bridging the gap between artificial and biological neural networks, potentially unlocking new frontiers in AI capabilities.

For further details, please read our Interactive Report, technical report and released code.

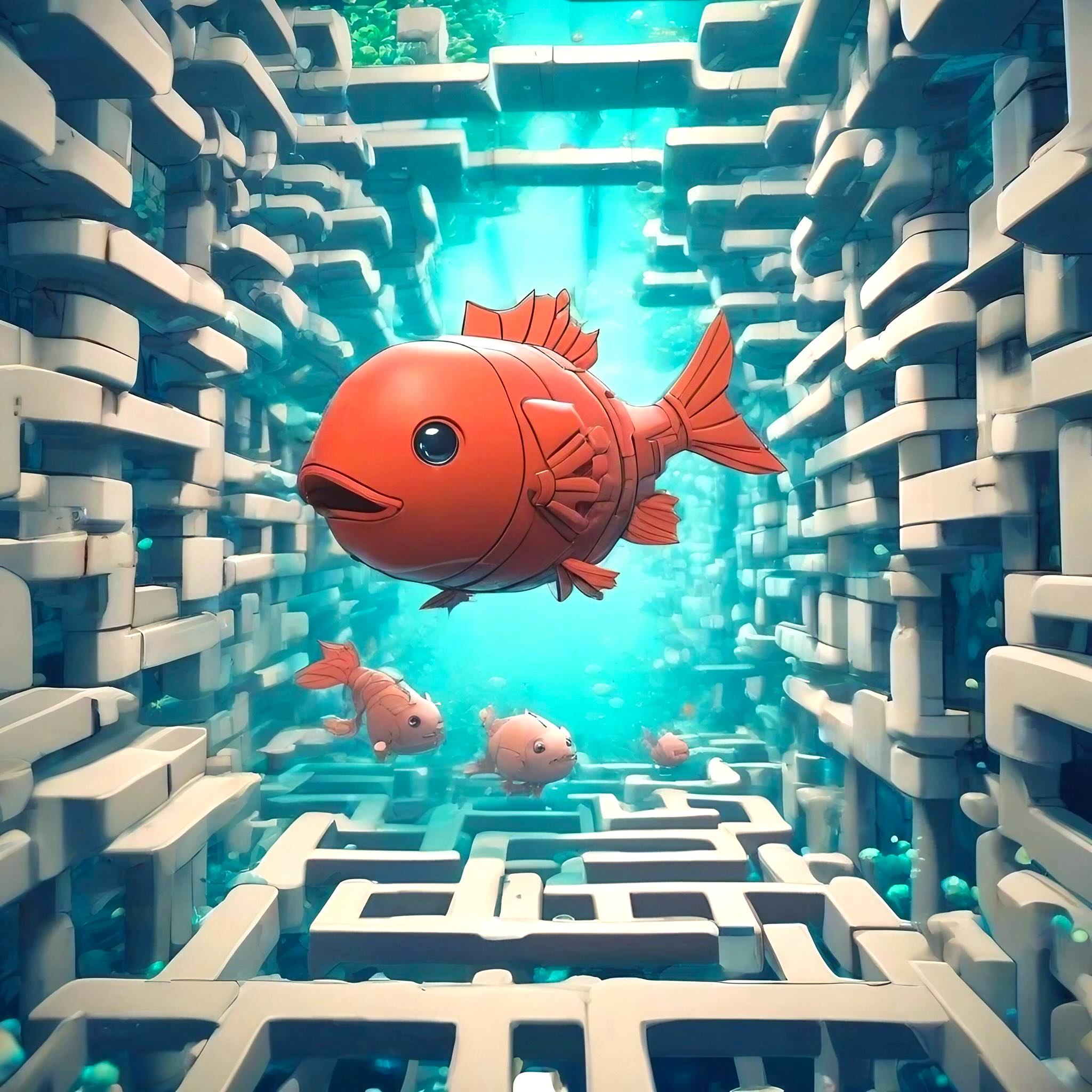

A visualization of the CTM solving mazes and thinking about real photographs (Photo credits: Alon Cassidy). Remarkably, although it was not explicitly designed to do so, the solution it learns on mazes is highly interpretable and human-like: we can see it tracing out the path through the maze as it ‘thinks’ about the solution. For real images, there is no explicit incentive to look around, yet it does so in an intuitive way.

Introduction

Our brains excel in areas where even the most sophisticated modern AIs struggle, and often do so with far greater efficiency. At Sakana AI, we often look to nature for inspiration on how to make progress in AI: for example, using evolution to merge models, evolving more efficient memory for language models or exploring the space of artificial life. While artificial neural networks have allowed AI to achieve remarkable feats in recent years, they remain simplified representations of their biological counterparts. Can we unlock new levels of capability and efficiency in AI by incorporating features found in biological brains?

We decided to rethink an important feature at the heart of cognition: time. Despite the significant leap in AI capabilities with the advent of deep learning in 2012, the fundamental model of the artificial neuron used in AI has remained largely unchanged since the 1980s. Researchers still primarily use a single output from each neuron, which represents how strongly the neuron is firing but neglects the precise timing of when it fires relative to other neurons. However, strong evidence suggests that this timing information is vital in biological brains, for example in spike-timing-dependent plasticity, and is foundational to how they function.

To represent this information in the new model, we simply give each neuron access to its own history of behaviour and let it learn how to use that history to calculate its next output, rather than relying only on its current state. This allows it to change its behaviour based on information from different points in the past. Additionally, the primary behaviour of the new model is based on the synchronization between these neurons, meaning that they must learn to use this timing information to coordinate with one another to solve a task. We argue that this gives rise to a much richer space of dynamics and task-solving behaviour than we observe in contemporary models.
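To make the idea of a neuron with access to its own history more concrete, here is a minimal Python sketch. It assumes each neuron keeps a sliding window of its most recent inputs (the `memory_length`) and passes that window through a tiny network whose weights are private to that neuron. The class name `NeuronLevelModel`, the window size, the one-hidden-layer design and the random initialization are illustrative assumptions, not the released CTM code.

```python
import math
import random
from collections import deque


class NeuronLevelModel:
    """Hypothetical per-neuron model: maps one neuron's recent history of
    pre-activations to its next output using weights private to that neuron."""

    def __init__(self, memory_length: int, hidden: int, rng: random.Random):
        self.memory_length = memory_length
        # Private weights: no other neuron shares these parameters.
        self.w1 = [[rng.gauss(0.0, 0.5) for _ in range(memory_length)] for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        self.w2 = [rng.gauss(0.0, 0.5) for _ in range(hidden)]
        self.b2 = 0.0
        # Sliding window of the most recent pre-activations, oldest first.
        self.history = deque([0.0] * memory_length, maxlen=memory_length)

    def step(self, pre_activation: float) -> float:
        # Push the newest pre-activation; the deque drops the oldest automatically.
        self.history.append(pre_activation)
        # The hidden layer reads the whole window, so the output depends on past inputs too.
        h = [math.tanh(sum(w * x for w, x in zip(row, self.history)) + b)
             for row, b in zip(self.w1, self.b1)]
        return sum(w * v for w, v in zip(self.w2, h)) + self.b2


# Usage: a layer of five neurons, each with its own private model.
rng = random.Random(0)
neurons = [NeuronLevelModel(memory_length=4, hidden=3, rng=rng) for _ in range(5)]
outputs = [n.step(0.1 * i) for i, n in enumerate(neurons)]
```

Because each neuron reads a window rather than a single value, two neurons receiving the same current input can still respond differently if their recent pasts differ.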

After adding this timing information, we see a wide range of non-trivial behaviors across a number of tasks. We highlight some results below. The behavior is highly interpretable: when observing images, the CTM carefully moves its gaze around the scene, choosing to focus on the most salient features present. We also see improved performance on some tasks. We were particularly surprised by the diversity of behaviour in the dynamics of neuron activity.

A sample of the neuron dynamics seen in the CTM, showing how they change with different inputs. The CTM clearly learns a very diverse set of neuron behaviours. How each neuron (shown in a random color) fires in relation to the other neurons is what we call synchronization. We measure this and use it as the CTM’s representation.
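Synchronization can be sketched as a score for each pair of neurons that is high when their activity traces rise and fall together and negative when they move in opposition. The sketch below assumes the score is an inner product of two neurons' activation histories, optionally down-weighting older ticks with an exponential decay and normalizing by the total weight. The function name, the `decay` parameter and the choice of which pairs to measure are hypothetical.

```python
import math


def synchronization(histories, pairs, decay=0.0):
    """Hypothetical pairwise synchronization readout.

    histories: per-neuron activation traces of equal length T, oldest first.
    pairs: (i, j) neuron index pairs to measure.
    decay: rate r >= 0; a tick that is `age` steps old is weighted by exp(-r * age).
    Returns one score per pair: the decay-weighted inner product of the two
    traces divided by the square root of the total weight.
    """
    T = len(histories[0])
    # The most recent tick (tau = T - 1) has age 0 and weight 1.
    weights = [math.exp(-decay * (T - 1 - tau)) for tau in range(T)]
    norm = math.sqrt(sum(weights))
    scores = []
    for i, j in pairs:
        s = sum(w * zi * zj for w, zi, zj in zip(weights, histories[i], histories[j]))
        scores.append(s / norm)
    return scores


# Usage: neurons 0 and 1 oscillate in phase, neuron 2 in anti-phase.
traces = [[1, -1, 1, -1], [1, -1, 1, -1], [-1, 1, -1, 1]]
print(synchronization(traces, [(0, 1), (0, 2)]))  # [2.0, -2.0]
```

The scores for a chosen set of pairs form a vector that can serve as a representation, which is the role synchronization plays in the text above.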

The new model’s behaviour is based on a new kind of representation: the synchronization between neurons over time. We believe this is much more reminiscent of biological brains, although it is not a strict emulation. We call the resulting AI model the Continuous Thought Machine (CTM), a model capable of using this new time dimension, rich neuron dynamics and synchronization information to ‘think’ about a task and plan before giving its answers. We use the term ‘Continuous’ in the name because the CTM operates entirely in an internal ‘thinking dimension’ when reasoning. It is asynchronous with respect to the data it consumes: it can reason about static data (e.g., images) and sequential data in an identical fashion. We tested this new model on a wide range of tasks and found that it can solve diverse problems, often in a very interpretable manner.
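The ‘thinking dimension’ can be illustrated with a toy loop whose length is set by an internal number of ticks rather than by the input. In this sketch a static input vector is re-read at every tick, each neuron updates from a short private window of its pre-activations, synchronization between a few hypothetical neuron pairs is recomputed from the activity so far, and a linear head turns it into class logits at every tick. All sizes, the random weights, the `tanh` nonlinearity and the name `ctm_forward` are assumptions chosen for brevity; nothing here is trained, and it is not the released implementation.

```python
import math
import random


def ctm_forward(features, n_neurons=8, memory=4, ticks=6, n_classes=3, seed=0):
    """Toy internal-tick loop loosely inspired by the CTM description (untrained, hypothetical).

    `features` is a static input such as a flattened image embedding. The loop runs
    for `ticks` internal steps regardless of the input's size, re-reads the same
    input at every tick and returns class logits for every tick.
    """
    rng = random.Random(seed)
    d_in = n_neurons + len(features)
    # "Synapse": shared linear map from [previous outputs, input] to pre-activations.
    w_syn = [[rng.gauss(0.0, 0.3) for _ in range(d_in)] for _ in range(n_neurons)]
    # Private per-neuron weights over that neuron's own pre-activation history.
    w_nlm = [[rng.gauss(0.0, 0.5) for _ in range(memory)] for _ in range(n_neurons)]
    # Hypothetical choice of neuron pairs whose synchronization is read out.
    pairs = [(i, (i + 1) % n_neurons) for i in range(n_neurons)]
    w_out = [[rng.gauss(0.0, 0.5) for _ in range(len(pairs))] for _ in range(n_classes)]

    pre_hist = [[0.0] * memory for _ in range(n_neurons)]  # sliding windows
    post_hist = [[] for _ in range(n_neurons)]  # full activation traces
    post = [0.0] * n_neurons
    logits_per_tick = []
    for _ in range(ticks):
        x = post + list(features)
        pre = [sum(w * v for w, v in zip(row, x)) for row in w_syn]
        for i in range(n_neurons):
            pre_hist[i] = pre_hist[i][1:] + [pre[i]]  # drop oldest, append newest
        post = [math.tanh(sum(w * h for w, h in zip(w_nlm[i], pre_hist[i])))
                for i in range(n_neurons)]
        for i in range(n_neurons):
            post_hist[i].append(post[i])
        # Synchronization of the activity so far, normalised by the trace length.
        t = len(post_hist[0])
        sync = [sum(a * b for a, b in zip(post_hist[i], post_hist[j])) / math.sqrt(t)
                for i, j in pairs]
        logits_per_tick.append([sum(w * s for w, s in zip(row, sync)) for row in w_out])
    return logits_per_tick


# Usage: a 3-number "image" and a 50-number one both get 6 thinking ticks.
short_out = ctm_forward([0.2, -0.1, 0.4])
long_out = ctm_forward([0.01] * 50)
```

Because `ticks` is independent of `len(features)`, the loop treats a short and a long input identically, which is the sense in which this toy is decoupled from the data it consumes.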

The neuron dynamics that we observed are somewhat more reminiscent of the dynamics measured in real brains than those of more traditional artificial neural networks, which show much less diverse behaviour; see the comparison below with the LSTM, a classic AI model. The CTM shows neurons that oscillate at different frequencies and amplitudes. Some neurons show several frequencies at once, while others are active only when solving a task. It is worth stressing that all of these behaviours are completely emergent: they were not designed into the model and appear as a side effect of adding the timing information and learning to solve the different tasks.

A comparison between the neural dynamics of the CTM and those seen in currently popular artificial neural networks.

Testing the new CTM model architecture

Because the CTM has a new time dimension, one major advantage is that we can observe and visualize how it solves a problem over time. Unlike a traditional AI system that might classify an image in a single pass through the neural network, the CTM can take several steps to ‘think’ about how to solve a task. To demonstrate the power and interpretability of the CTM, we showcase two tasks below: maze solving and classifying objects in photos. Demonstrations on more tasks can be found in our Interactive Report and academic paper.

Maze Solving

In this task, the CTM is presented with a 2D top-down maze and asked to output the steps required to solve it. This format is particularly challenging because the model must build an understanding of the maze structure and plan a solution, rather than simply output a visual representation of the path. The CTM’s internal continuous ‘thinking steps’ allow it to develop a plan, and we can visualize which parts of the maze it focuses on during each thinking step. Remarkably, the CTM learns a very human-like approach to solving mazes: we can actually see it following the path through the maze in its attention patterns.
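One way to picture how the model ‘looks’ at parts of the maze is single-query dot-product attention: a query vector, which we assume here is a linear projection of the synchronization representation, is compared with a key vector for each maze cell, and the softmax of those scores gives the kind of attention map that would be visualized. The names `query_from_sync` and `attend`, the projection matrix `w_q` and the toy cell vectors are hypothetical.

```python
import math


def query_from_sync(sync, w_q):
    """Hypothetical linear projection of a synchronization vector to a query."""
    return [sum(w * s for w, s in zip(row, sync)) for row in w_q]


def attend(query, keys, values):
    """Single-query scaled dot-product attention over, e.g., maze cells.

    Returns (weights, output): weights is the attention map (sums to 1),
    output is the weight-averaged value vector.
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    output = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
    return weights, output


# Usage: three toy maze cells; the query points most strongly at cell 0.
query = query_from_sync([0.9, 0.1], [[2.0, 0.0], [0.0, 2.0]])
cell_keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
cell_values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
attn_map, read_out = attend(query, cell_keys, cell_values)
```

Recomputing the query from synchronization at every thinking step would let the attention map move over time, which is what the maze visualizations show.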

The CTM solves mazes by observing them (using attention) and directly producing steps (e.g., go left, right, etc.). It does this using the synchronization of neural dynamics (i.e., via a linear probe on the synchronization representation itself). Note how the attention pattern follows the route through the maze: a very interpretable approach. Please explore the interactive version of the maze-solving visualization in our Interactive Report.
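The linear probe mentioned in the caption can be sketched as a single matrix that maps the synchronization vector to one block of action logits per route step, with the highest-scoring action chosen at each step. The five-action vocabulary (including a ‘wait’ action), the fixed `route_len` and the function name `decode_route` are assumptions for illustration.

```python
ACTIONS = ["up", "down", "left", "right", "wait"]  # assumed action vocabulary


def decode_route(sync, w_probe, route_len):
    """Hypothetical linear probe: synchronization vector -> one action per route step.

    w_probe has route_len * len(ACTIONS) rows, each the same length as `sync`.
    """
    n_act = len(ACTIONS)
    if len(w_probe) != route_len * n_act:
        raise ValueError("w_probe must have route_len * len(ACTIONS) rows")
    logits = [sum(w * s for w, s in zip(row, sync)) for row in w_probe]
    route = []
    for k in range(route_len):
        step_logits = logits[k * n_act:(k + 1) * n_act]
        best = max(range(n_act), key=step_logits.__getitem__)
        route.append(ACTIONS[best])
    return route


# Usage: hand-set probe weights so that step 0 prefers "left" and step 1 prefers "up".
sync = [1.0, 0.0]
w_probe = [[0.0, 0.0] for _ in range(2 * len(ACTIONS))]
w_probe[ACTIONS.index("left")] = [1.0, 0.0]
w_probe[len(ACTIONS) + ACTIONS.index("up")] = [1.0, 0.0]
print(decode_route(sync, w_probe, route_len=2))  # ['left', 'up']
```

Keeping the readout linear means any route information must already be present in the synchronization vector itself, rather than being computed by a large output head.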

What’s particularly impressive about this behavior is that it emerges naturally from the model’s architecture. We don’t explicitly design the CTM to trace paths through mazes; it develops this approach on its own through learning. Additionally, we found that when allowed more thinking steps, the CTM continues following the path beyond the point it was trained to reach, indicating that it has indeed learned a general solution to this problem.

Image Recognition

ImageNet is the classic image classification benchmark that sparked the deep learning revolution in 2012. Traditional image recognition systems make classification decisions in a single step, but the CTM takes multiple steps to examine different parts of the image before making its decision. This step-by-step approach not only makes the AI’s behavior more interpretable but also improves accuracy: the longer it “thinks,” the more accurate its answers become. We also found that this allows the CTM to spend less time thinking about simpler images, thus saving energy. When identifying a gorilla, for example, the CTM’s attention moves from eyes to nose to mouth in a pattern remarkably similar to human visual attention.
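A simple way to let a model spend less time on easy images is to stop thinking once its prediction is confident enough. The sketch below assumes certainty is measured as one minus the normalized entropy of the softmax over classes and that thinking stops at the first tick whose certainty reaches a threshold; the threshold value and the function names are hypothetical, and this is not necessarily how the CTM decides when to stop.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def certainty(logits):
    """1 - normalised entropy: 0 for a uniform prediction, approaching 1 when peaked."""
    p = softmax(logits)
    entropy = -sum(pi * math.log(pi) for pi in p if pi > 0)
    return 1.0 - entropy / math.log(len(p))


def think_until_confident(logits_per_tick, threshold=0.8):
    """Return (tick, predicted class) at the first tick whose certainty reaches
    `threshold`, or at the last tick if none does. The threshold is hypothetical."""
    for t, logits in enumerate(logits_per_tick):
        if certainty(logits) >= threshold:
            return t, max(range(len(logits)), key=logits.__getitem__)
    last = logits_per_tick[-1]
    return len(logits_per_tick) - 1, max(range(len(last)), key=last.__getitem__)


# Usage: an "easy" input is confident immediately; a "hard" one needs more ticks.
easy = [[10.0, 0.0, 0.0], [12.0, 0.0, 0.0]]
hard = [[0.0, 0.0, 0.0], [0.3, 0.2, 0.0], [0.0, 6.0, 0.0], [0.0, 9.0, 0.0]]
print(think_until_confident(easy))  # (0, 0)
print(think_until_confident(hard))  # (2, 1)
```

Normalizing the entropy by `log(number of classes)` keeps certainty between 0 and 1 regardless of how many classes there are, so one threshold can be reused across tasks.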

Here we see examples of the behavior of the CTM when classifying images (Photo credits: Alon Cassidy). The heat maps show where the CTM is directing its attention when processing the image, and the arrows show the center of attention. Many more examples can be seen in the Interactive Report.
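If the arrow is taken to mark the attention-weighted centroid of the heat map (an assumption; the visualizations may compute the center differently), it can be computed from a 2D attention map as follows. The function name `attention_center` is hypothetical.

```python
def attention_center(attn):
    """Attention-weighted centroid (row, col) of a 2D map of non-negative weights."""
    total = sum(sum(row) for row in attn)
    if total <= 0:
        raise ValueError("attention map must have positive total weight")
    # Each coordinate is averaged using the attention weights as the mass.
    cy = sum(r * w for r, row in enumerate(attn) for w in row) / total
    cx = sum(c * w for row in attn for c, w in enumerate(row)) / total
    return cy, cx


# Usage: attention split evenly between two neighbouring cells in the middle row.
heat = [[0.0, 0.0, 0.0],
        [0.0, 0.5, 0.5],
        [0.0, 0.0, 0.0]]
print(attention_center(heat))  # (1.0, 1.5)
```

Tracking this centroid across thinking steps gives a trajectory of where the model is looking, similar in spirit to the arrows drawn on the images.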

These attention patterns provide a window into the model’s reasoning process, showing us which features it finds most relevant for classification. This interpretability is valuable not just for understanding the model’s decisions, but potentially for identifying and addressing biases or failure modes.

Conclusion

Although modern AI is loosely based on the brain, in the form of ‘artificial neural networks’, the overlap between AI research and neuroscience is surprisingly thin even today. AI researchers choose to stay with the neuron model developed in the 1980s because of its simplicity, efficient training and continued success in driving forward progress in AI. Neuroscience, on the other hand, creates much more accurate models of the brain, but primarily to understand the brain rather than to create superior models of intelligence (though, of course, one could lead to the other). These neuroscience models, despite their added complexity, usually still underperform current state-of-the-art AI models, which perhaps makes them less compelling to investigate further for AI applications.

Despite this, we believe that failing to push modern AI closer to how the brain works, at least in some respects, is a missed opportunity, and that we might find much more capable and efficient models this way. The massive jump in capabilities in 2012, the so-called “deep learning revolution”, occurred because of neural networks, a model inspired by the brain. Should we not continue to be inspired by the brain in order to continue this progress? The CTM is our first attempt to bridge the gap between these two fields in a way that shows some initial hints of more brain-like behaviour while still being a practical AI model for solving important problems.

We’re excited to continue pushing our models in this nature-inspired direction and exploring what new capabilities might emerge. For more detailed examples of the CTM’s behavior across different tasks, please visit our Interactive Report. Complete details about the CTM’s architecture and implementation can be found in our technical report and code.

As we move forward, we invite the AI and neuroscience communities to join us in exploring this promising intersection of biology and computation. Together, we can develop AI systems that better capture the remarkable capabilities of biological intelligence while maintaining the practical advantages of artificial neural networks.

Sakana AI
